Wed 16 October 2019

We have been exploring moving some of our workloads to serverless infrastructure for some time. Initially we considered AWS Lambda as the go-to solution, but Cloud Run's out-of-the-box support for containers made it an attractive option, as it lowered the cost of moving existing containers.

We decided to try moving our non-static websites first. Here is what we learned using it and why we decided it is not yet ready for prime time.

Moving Workloads

If your containers are already stored on GCR, setting up Cloud Run is very straightforward. Code had to be adapted to listen on the $PORT set by Cloud Run. This turned out to be more complicated than anticipated, as we are using Nginx, which does not support environment variables in its configuration files.

The options were either using a scripting language like Lua or Perl to create an Nginx variable from the environment variable, or using envsubst to transform a configuration template by substituting environment variable values.
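
To illustrate the template approach, here is a minimal Python sketch of what an envsubst-style substitution does at container start. It is not envsubst itself and not our actual setup: the function name render_template, the allowed-variable list and the sample configuration are hypothetical. Only whitelisted ${NAME} placeholders are replaced, so Nginx's own variables such as $uri are left alone.

```python
import os
import re

# Hypothetical config template; only ${PORT} is meant to be substituted.
TEMPLATE = """server {
    listen ${PORT};
    location / {
        try_files $uri $uri/ =404;
    }
}
"""

PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def render_template(template, env, allowed=("PORT",)):
    """Replace ${NAME} for allowed names found in env; leave everything else as-is."""

    def replace(match):
        name = match.group(1)
        if name in allowed and name in env:
            return str(env[name])
        # Unknown or non-whitelisted placeholder: keep the original text.
        return match.group(0)

    return PLACEHOLDER.sub(replace, template)


if __name__ == "__main__":
    # Cloud Run injects PORT; 8080 is used here only as a local fallback.
    print(render_template(TEMPLATE, {"PORT": os.environ.get("PORT", "8080")}))
```

The rendered output would then be written to the real Nginx configuration file before Nginx starts.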

Encrypted Traffic

One clear advantage of Cloud Run is that you no longer need to configure your TLS/SSL endpoint, as Cloud Run takes care of it.

This improves security, as you no longer need to keep up to date with TLS best practices, which protocols to support, and so on. However, it also reduces your leeway, as you might want to enforce a strict policy or support old browsers.

In these cases, a managed TLS setup that cannot be configured is a big issue.

Connecting to External Services

Once the service was up and running, it kept complaining about the SQL database connection. To our surprise, Cloud Run supports connections to internal Google services only. A database not running on GCP is not supported.

This also limits the usefulness of Cloud Run for the many use cases that require outgoing connections. If you really need that, running Cloud Run on a dedicated GKE cluster is possible, but the setup was so complex and the documentation so unclear that we simply gave up.

To continue testing, we ended up setting up a database on GCP using Cloud SQL. The SQL connection is, however, made through socket files mounted into the container at /cloudsql/.
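
As a rough sketch of what the code change looks like, the snippet below builds driver settings that point at the mounted socket instead of a TCP host. It assumes a MySQL or PostgreSQL instance (the engine is an assumption made for illustration), and the function name socket_settings and the instance connection name my-project:europe-west1:my-db are hypothetical.

```python
CLOUDSQL_DIR = "/cloudsql"


def socket_settings(instance_connection_name, engine):
    """Return driver keyword arguments that use the Cloud SQL Unix socket.

    instance_connection_name has the form PROJECT:REGION:INSTANCE.
    """
    base = f"{CLOUDSQL_DIR}/{instance_connection_name}"
    if engine == "mysql":
        # MySQL drivers typically take the socket path itself,
        # e.g. pymysql.connect(unix_socket=...).
        return {"unix_socket": base}
    if engine == "postgres":
        # PostgreSQL drivers take the directory that contains the socket file
        # as the host, e.g. psycopg2.connect(host=...).
        return {"host": base}
    raise ValueError(f"unsupported engine: {engine}")


if __name__ == "__main__":
    # Hypothetical instance connection name, for illustration only.
    print(socket_settings("my-project:europe-west1:my-db", "mysql"))
```

User, password and database name would be passed to the driver as usual; only the host part changes.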

Setting this up also required changes to the code, but was very straightforward. The real fun came when we had to deal with IAM policy errors and missing APIs. Not fun to go through, but once you get it working, reusing the same service account to connect to the database takes no extra effort and is clearly more secure.

Scaling

Once Cloud Run was set up and the DB connection was working, the moment of truth came: how well does it scale and perform?

Unleashing thousands of connections on the service worked marvelously, reaching 1k QPS and spiking to 1.6k QPS.

Scaling was a pleasant surprise and worked out of the box, though you might hit a bottleneck at the database if it is underprovisioned.

Latency

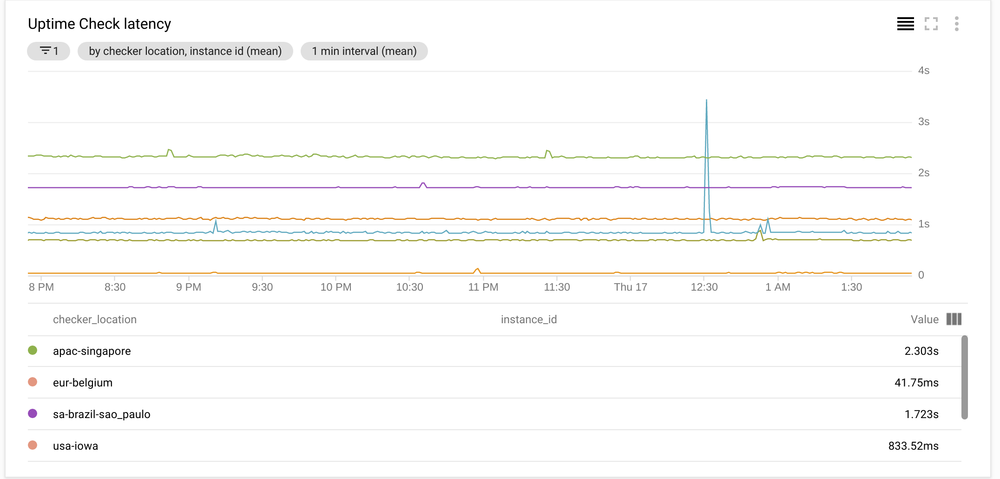

Latency was unfortunately the deal breaker. Maintaining a relatively low latency for our websites was important to offer a good user experience.

Latency on our current setup ranged from 40 ms to 2000 ms on average, with 3000 ms at the 95th percentile.
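
For readers less familiar with percentile figures, here is a small sketch of how an average and a 95th percentile can be computed from raw latency samples, using the nearest-rank method. The sample values are made up for illustration and are not our measurements, and our monitoring tool may well use a different percentile definition.

```python
def mean(samples):
    """Arithmetic mean of a non-empty list of latencies."""
    return sum(samples) / len(samples)


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    all samples at or below it. pct is an integer from 1 to 100."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating point rounding.
    rank = max((pct * len(ordered) + 99) // 100, 1)
    return ordered[rank - 1]


if __name__ == "__main__":
    # Made-up latencies in milliseconds.
    latencies_ms = [40, 120, 250, 600, 900, 1500, 2000, 2400, 3000, 3200]
    print("mean:", mean(latencies_ms))
    print("p95:", percentile(latencies_ms, 95))
```

A few slow requests barely move the average but dominate the 95th percentile, which is why both numbers are worth tracking.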

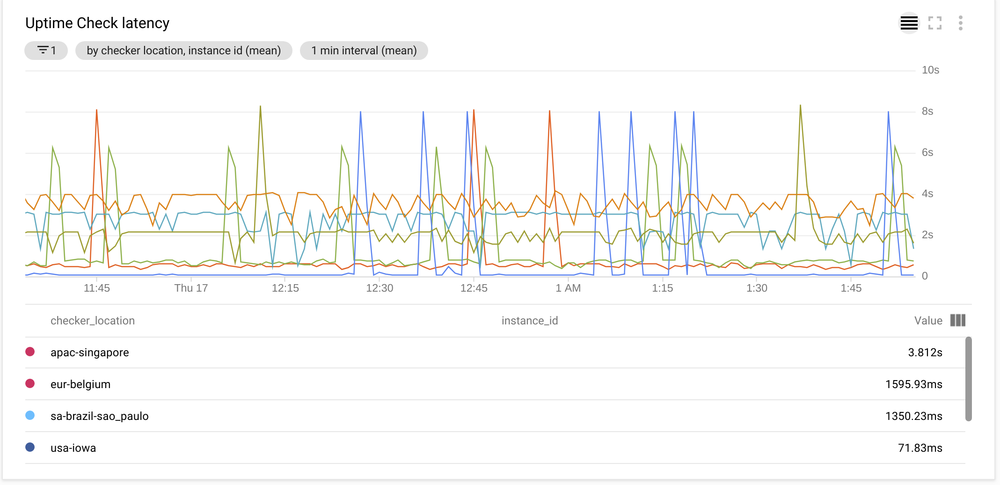

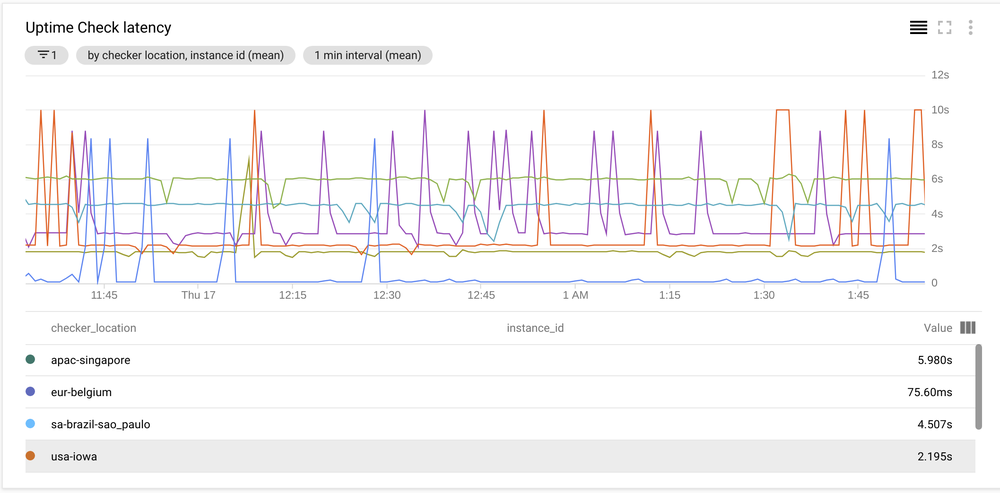

Cloud Run latency was just horrible, at 4000 ms to 8000 ms. We could see latency go down after a cold start, but even then it remained high, between 600 ms and 4000 ms, and very often spiked back to 8000 ms.

Verdict

Cloud Run looked very promising and clearly has great potential because of its out-of-the-box support for containers. Its integration with Cloud Build also seems like a big plus for automating deployments.

The lack of support for outbound connections limits its usefulness and integration scenarios. I am assuming this is done to prevent the service from being abused to run DDoS attacks.

While the scaling numbers are impressive, latency is very problematic, which limits its usefulness for running websites or even APIs.

Using the service for non-latency-sensitive work is still an option, as long as it doesn't require outbound connections.