Mon 22 January 2018

Through a series of blog posts, I will be sharing our past experiments with the use of reinforcement learning for automated testing, both to chase bugs and to find vulnerabilities.

Our initial goal was to build a generic, intelligent approach to identifying vulnerabilities in mobile applications, initially targeting Android Java-based applications and iOS LLVM bitcode-based applications. Our experimentation led us to learn about reinforcement learning and to use it, with what seemed to be very interesting results.

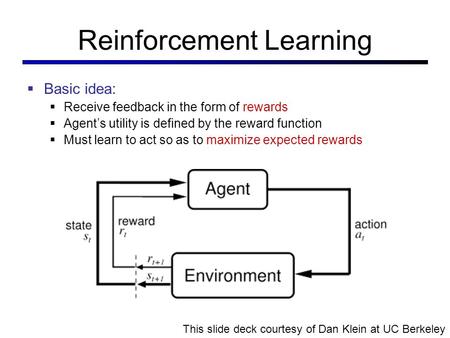

For those unfamiliar with reinforcement learning, it is a branch of machine learning that relies on a feedback loop to continuously improve results.

Reinforcement learning was used, for instance, by the AlphaGo project, which relied on a specialized form called deep reinforcement learning that, as the name suggests, uses deep learning.

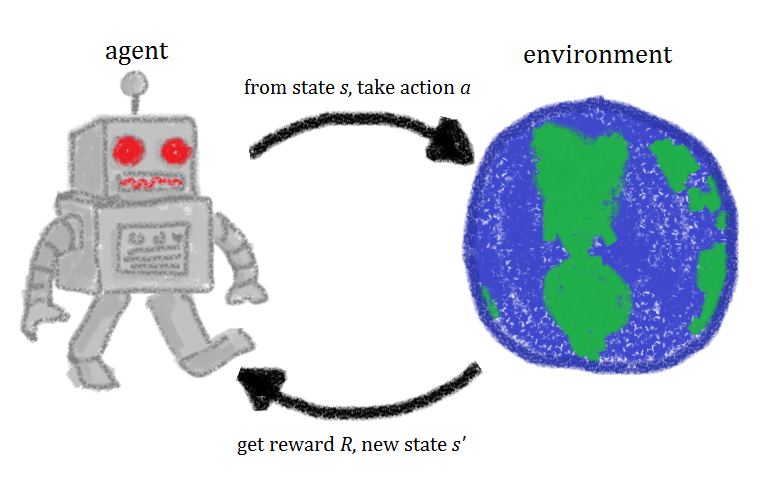

Reinforcement learning is in reality quite simple and intuitive. An agent performs an action that it sends to an environment, then collects information about the new state and the outcome of the action (referred to as a reward or punishment), and finally computes a new action based on that feedback. The algorithm that computes the next action is referred to as a policy.
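To make the loop concrete, here is a minimal sketch in Python. It is not taken from our tooling: the `BanditEnvironment` and `EpsilonGreedyPolicy` names are made up for illustration, the environment is deliberately stateless (a "multi-armed bandit"), and epsilon-greedy is just one simple policy among many.

```python
import random


class BanditEnvironment:
    """Toy environment: each action pays a reward of 1 with a fixed, hidden probability."""

    def __init__(self, payout_probabilities, seed=0):
        self.payout_probabilities = payout_probabilities
        self.rng = random.Random(seed)

    def step(self, action):
        # The outcome of the action: 1.0 is a reward, 0.0 is a punishment.
        return 1.0 if self.rng.random() < self.payout_probabilities[action] else 0.0


class EpsilonGreedyPolicy:
    """Mostly picks the action with the best average reward so far, sometimes explores."""

    def __init__(self, n_actions, epsilon=0.1, seed=1):
        self.epsilon = epsilon
        self.counts = [0] * n_actions
        self.values = [0.0] * n_actions  # running average reward per action
        self.rng = random.Random(seed)

    def choose_action(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))  # explore
        return max(range(len(self.values)), key=lambda a: self.values[a])  # exploit

    def update(self, action, reward):
        self.counts[action] += 1
        # Incremental mean: move the estimate towards the observed reward.
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(steps=2000):
    env = BanditEnvironment([0.2, 0.5, 0.8])
    policy = EpsilonGreedyPolicy(n_actions=3)
    total_reward = 0.0
    for _ in range(steps):
        action = policy.choose_action()   # the agent computes an action
        reward = env.step(action)         # the environment returns the outcome
        policy.update(action, reward)     # the agent learns from that outcome
        total_reward += reward
    return policy, total_reward
```

Calling `run()` returns the trained policy and the accumulated reward. A real problem would also feed the observed state into the policy; that part is left out here to keep the loop visible.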

As far as I know, the use of reinforcement learning for security testing has never been mentioned before, except in 2 very recent academic papers that claim to be the first to do so. In reality, reinforcement learning has existed for quite some time in some security testing tools and has already proven to produce amazing results. AFL by Michał Zalewski is the best example, having found a staggering number of vulnerabilities.

AFL is commonly referred to as an evolutionary fuzzer. It generates a test case that gets passed to an instrumented program, then collects the execution trace and generates new inputs that aim at increasing code coverage within the tested program.

Evolutionary fuzzing in general needs to solve 3 problems:

1- Fast Instrumentation

2- Smart Code Coverage Algorithm

3- Efficient Vulnerability Identification

Though the first (Fast Instrumentation) and last (Efficient Vulnerability Identification) problems have nothing to do with reinforcement learning, I find them so interesting that I believe they are worth some explanation.

Instrumentation consists simply of tracing a program's execution. The principle is simple, but the implementation is notoriously complex. Instrumentation can have several levels of granularity: per function call, per block or even per instruction. The finer the granularity, the slower it gets. There are different ways to instrument a program:

- Compile-time, which simply delegates to the compiler the task of adding instrumentation instructions. Initially this was the fastest approach, as the compiler has a better understanding of the program and, most importantly, is able to run its optimizations on the instrumented code.

- Software-based run-time, which is suited to cases where we don't have access to the source code of the program. This is by far the slowest approach, as it requires constant jumping between the instrumented code and the instrumentation code. It is also very error-prone and difficult to get right.

- Hardware-based run-time, which is my favorite approach as it brings the best of both worlds: low overhead and no need for source code. Intel and ARM added support in their processors for program tracing with very low overhead, and both AFL and HonggFuzz, for instance, have support for using hardware-based instrumentation.
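The effect of granularity is easy to see with Python's own `sys.settrace` hook, which is a form of software-based run-time instrumentation. The sketch below is only an illustration: `classify` is a made-up target and `trace_execution` is a hypothetical helper, not part of any fuzzer.

```python
import sys


def classify(value):
    if value < 0:
        return "negative"
    if value == 0:
        return "zero"
    return "positive"


def trace_execution(func, argument, per_line):
    """Run func(argument) and record either function-level or line-level events."""
    events = []

    def tracer(frame, event, arg):
        if frame.f_code is not func.__code__:
            return None  # ignore any other code object
        if event == "call":
            events.append(("call", frame.f_code.co_name))
            # Returning a local tracer enables line events for this frame.
            return tracer if per_line else None
        if event == "line":
            # Store the line offset relative to the start of the function.
            events.append(("line", frame.f_lineno - frame.f_code.co_firstlineno))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(argument)
    finally:
        sys.settrace(previous)  # always restore the previous tracer
    return events
```

With `per_line=False`, `classify(5)` and `classify(-1)` produce the same trace, so a fuzzer could not tell the two paths apart. With `per_line=True` the traces differ, at the cost of a callback on every executed line.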

Efficient vulnerability identification is yet another complex subject. Initially, most fuzzers relied on the program crashing as a sign of a potential vulnerability. However, crashes may not occur if, for instance, the overflow is too small to overwrite anything interesting.

A more advanced approach is the one used by sanitizers. LLVM sanitizers perform compile-time modifications to a program to make the triggering of a vulnerability more apparent, for example by adding memory guards that wrap every memory allocation.

All of these approaches are suited only to low-level languages and to hunting low-level vulnerabilities, like overflows or use-after-free.
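The idea behind memory guards can be shown with a toy heap model in Python. This is not how a real sanitizer is implemented; `PlainHeap`, `GuardedHeap` and the 4-byte guard size are assumptions made purely for illustration.

```python
class PlainHeap:
    """Allocations are packed back to back, so a small overflow silently hits the neighbour."""

    def __init__(self, size=64):
        self.memory = bytearray(size)
        self.next_free = 0

    def alloc(self, size):
        start = self.next_free
        self.next_free += size
        return start

    def write(self, address, data):
        self.memory[address:address + len(data)] = data


class GuardedHeap(PlainHeap):
    """Same heap, but every allocation is wrapped in poisoned guard bytes."""

    REDZONE = 4  # guard bytes on each side (arbitrary value for this sketch)

    def __init__(self, size=64):
        super().__init__(size)
        self.poisoned = set()

    def alloc(self, size):
        start = super().alloc(size + 2 * self.REDZONE)
        user_start = start + self.REDZONE
        self.poisoned.update(range(start, user_start))
        self.poisoned.update(range(user_start + size, user_start + size + self.REDZONE))
        return user_start

    def write(self, address, data):
        # Check every written byte against the guard zones before touching memory.
        for offset in range(len(data)):
            if address + offset in self.poisoned:
                raise MemoryError(f"write to guard byte at address {address + offset}")
        super().write(address, data)


def overflow_by_one(heap):
    """Write 9 bytes into an 8-byte buffer that sits next to another allocation."""
    buffer = heap.alloc(8)
    neighbour = heap.alloc(8)
    heap.write(neighbour, b"SECRET!!")
    heap.write(buffer, b"A" * 9)  # one byte too many
    return bytes(heap.memory[neighbour:neighbour + 8])
```

On `PlainHeap` the one-byte overflow corrupts the neighbouring buffer without any crash, which is exactly the case a crash-only fuzzer misses. On `GuardedHeap` the same write raises `MemoryError` as soon as it touches a guard byte.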

The 2nd component of evolutionary fuzzers is the algorithm that increases code coverage, and this is where the reinforcement part happens.

AFL uses genetic algorithms to generate inputs and relies on block-based instrumentation to determine whether an input triggered a new path in the program.

Genetic algorithms aim at imitating natural selection: they produce inputs, apply a set of modifications (crossover and mutation) and select from the generated population a subset that passes a fitness function.

In the case of AFL's genetic algorithm:
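AFL's own technical notes describe recording transitions between blocks in a fixed-size map indexed by `cur_location ^ prev_location`, with `prev_location` then set to `cur_location >> 1`. The Python sketch below mimics that bookkeeping under simplifying assumptions: block IDs are passed in as a plain list, counters saturate at 255, and AFL's hit-count bucketing is left out. The function names are mine.

```python
MAP_SIZE = 1 << 16  # 64 kB map, as in AFL's documentation


def record_trace(block_ids):
    """Build a coverage map from the sequence of block IDs hit during one run."""
    bitmap = bytearray(MAP_SIZE)
    prev_location = 0
    for cur_location in block_ids:
        index = (cur_location ^ prev_location) % MAP_SIZE
        bitmap[index] = min(bitmap[index] + 1, 255)  # saturating counter (simplification)
        # Shifting keeps A->B distinct from B->A and A->A distinct from B->B.
        prev_location = cur_location >> 1
    return bitmap


def has_new_coverage(trace_bitmap, global_seen):
    """Return True if this run hit a transition never seen before, and remember it."""
    found_new = False
    for index, hits in enumerate(trace_bitmap):
        if hits and not global_seen[index]:
            global_seen[index] = 1
            found_new = True
    return found_new
```

Two runs that visit the same blocks in a different order, say `[A, B, C]` and `[A, C, B]`, set different map entries, so the second run still counts as new coverage even though no new block was reached.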

- crossover operation consists for instance of block switching between inputs;

- mutation operation consists for instance of bit flipping;

- fitness function measures the discovery of a new execution path.

AFL also adds an element of curiosity, or an exploration bonus, by favoring inputs that trigger new execution paths. Genetic algorithms and exploration bonuses are commonly used in modern reinforcement learning solutions.
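Put together, the three operations above can be sketched as a tiny coverage-guided loop. This is a toy model and not AFL's code: `target` is a hypothetical instrumented program that reports which branches it executed, the magic bytes `aCE` were picked so that each one is a single bit flip away from the seed byte `A`, and the 20% crossover rate is arbitrary.

```python
import random


def target(data):
    """Hypothetical instrumented program: returns the set of branch names it executed."""
    covered = {"entry"}
    if len(data) > 0 and data[0] == ord("a"):
        covered.add("a")
        if len(data) > 1 and data[1] == ord("C"):
            covered.add("aC")
            if len(data) > 2 and data[2] == ord("E"):
                covered.add("aCE")
    return covered


def mutate(data, rng):
    """Mutation: flip one random bit."""
    if not data:
        return bytes([rng.randrange(256)])
    out = bytearray(data)
    position = rng.randrange(len(out))
    out[position] ^= 1 << rng.randrange(8)
    return bytes(out)


def crossover(first, second, rng):
    """Crossover: splice the head of one input onto the tail of another."""
    cut = rng.randrange(min(len(first), len(second)) + 1)
    return first[:cut] + second[cut:]


def fuzz(seeds, generations=5000, rng_seed=0):
    rng = random.Random(rng_seed)
    population = list(seeds)
    seen = set()
    for seed in population:
        seen |= target(seed)
    for _ in range(generations):
        child = rng.choice(population)
        if len(population) > 1 and rng.random() < 0.2:
            child = crossover(child, rng.choice(population), rng)
        child = mutate(child, rng)
        coverage = target(child)
        # Fitness: keep the child only if it reached a branch never seen before.
        if not coverage <= seen:
            seen |= coverage
            population.append(child)
    return population, seen
```

Running `fuzz([b"AAAA"])` grows the population one prefix at a time: an input is kept only when it unlocks a new branch, and later mutations build on it. That selection step is what lets a series of cheap bit flips get through nested checks that purely random inputs would almost never pass.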

Other approaches, which predate AFL's genetic algorithm, use SMT and SAT solvers. They require highly granular instrumentation and attempt to solve complex equations to discover a new execution branch.

SMT solvers have made huge progress in recent years, but other than the non-public SAGE, no fuzzer has reported good results with this approach.

Other fuzzers try a combination of multiple techniques to build on the strengths of both approaches. Driller, for instance, which won 2nd place at the DARPA Cyber Grand Challenge, used AFL, a modified QEMU and the Z3 SMT solver.

In the next blog posts, I will dive into some limitations of these approaches and present the use of reinforcement learning for identifying high-level vulnerabilities like SQLi, Command Injection and XXE.