Tue 18 April 2023

Python has become immensely popular thanks to its ease of use, simplicity, and readability. However, its dynamic nature makes it relatively slow compared to many compiled languages.

The performance of Python code can become a problem when dealing with large datasets, computationally intensive tasks, or real-time applications that require quick responses. Slow code can also increase the cost of running applications in cloud environments, where computing resources are charged based on usage.

Therefore, developers are constantly seeking ways to make their Python code run faster. This article explores strategies for doing so, from taking advantage of built-in APIs and modules to using third-party libraries and tools.

For comparison purposes, we’ll use a base script and compare its performance against the various tools or techniques discussed in the article. The script simply downloads images from Unsplash using a list of image URLs.

# base.py
import requests

IMG_URLS = [
    'https://images.unsplash.com/photo-1681139504760-4c17f2c8b380',
    'https://images.unsplash.com/photo-1661956601031-4cf09efadfce',
    'https://images.unsplash.com/photo-1681138279775-2b407d5d962a',
    'https://images.unsplash.com/photo-1530224264768-7ff8c1789d79',
    'https://images.unsplash.com/photo-1564135624576-c5c88640f235',
    'https://images.unsplash.com/photo-1541698444083-023c97d3f4b6',
    'https://images.unsplash.com/photo-1522364723953-452d3431c267',
    'https://images.unsplash.com/photo-1513938709626-033611b8cc03',
    'https://images.unsplash.com/photo-1507143550189-fed454f93097',
    'https://images.unsplash.com/photo-1504198453319-5ce911bafcde',
    'https://images.unsplash.com/photo-1661956602116-aa6865609028',
    'https://images.unsplash.com/photo-1516972810927-80185027ca84',
    'https://images.unsplash.com/photo-1681105225329-2372f21a59a8',
    'https://images.unsplash.com/photo-1661956601349-f61c959a8fd4'
]


def download_images() -> None:
    # Download the images one after another
    for img_url in IMG_URLS:
        img_bytes = requests.get(img_url).content
        # The fourth '/'-separated part of the URL is the photo ID
        img_name = img_url.split('/')[3]
        img_name = f'{img_name}.jpg'
        with open(img_name, 'wb') as img_file:
            img_file.write(img_bytes)
        print(f'{img_name} was downloaded...')

The script is plain, sequential Python code that has not been optimised: each image is downloaded only after the previous one has finished.

The benchmark script:

# benchmark.py
from timeit import timeit

t1 = timeit(
    "download_images()",
    setup="from base import download_images",
    number=1
)
print(f'Finished running base script in {t1} seconds')

t2 = timeit(
    "run_download_images()",
    setup="from threads import run_download_images",
    number=1
)
print(f'Finished running threads script in {t2} seconds')

print(f"Base: {t1:.3f} seconds")
print(f"Threading: {t2:.3f} seconds")
print(f"Threading is {t1 / t2:.3f}x faster!")

The benchmark.py script will be used to benchmark the performance of our scripts. The setup (‘from threads import run_download_images’ and ‘from base import download_images’) and stmt (‘run_download_images()’ and ‘download_images()’) will change depending on what we’re testing.
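Since the setup and stmt strings change for every comparison, the timing logic can be wrapped in a small helper. The following is a minimal sketch, not part of the original scripts: the bench function and its runs parameter are hypothetical names, and the workloads in the demo are stand-ins that need no network access.

```python
from timeit import repeat


def bench(stmt: str, setup: str = "pass", runs: int = 3) -> float:
    """Return the best wall-clock time (seconds) of `runs` single executions."""
    # repeat() returns one timing per run; the minimum is the least noisy.
    return min(repeat(stmt, setup=setup, repeat=runs, number=1))


if __name__ == "__main__":
    # Stand-in workloads. For the real scripts, pass for example
    # stmt="download_images()" and setup="from base import download_images".
    t_list = bench("sum([i * i for i in range(200_000)])")
    t_gen = bench("sum(i * i for i in range(200_000))")
    print(f"list comprehension: {t_list:.4f}s, generator: {t_gen:.4f}s")
```

Taking the minimum of several runs reduces the influence of background noise, which matters for the short-running scripts later in the article.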

1. Newer versions of Python

Running newer versions of Python, especially Python 3.11 (as of 2023), brings several performance improvements over the previous versions of Python. According to the Python documentation, Python 3.11 is between 10-60% faster than Python 3.10, and on average is about 1.25x faster than Python 3.10.

2. Threads

Python’s threading API provides a simple way of creating and managing threads. With threads, a program can have several tasks in progress at once, so that one task can make progress while another is waiting. Threads are ideal for I/O-bound tasks, meaning tasks that involve a lot of input and output operations, such as reading from or writing to the file system, making API requests, and downloading data. They are a poor fit for CPU-bound tasks (tasks that require a lot of computation), because all threads run inside one process and, in CPython, only one of them executes Python code at any moment.

The threading Module

In Python, threads are created using the threading module, which provides classes for creating and managing threads. The higher-level concurrent.futures module builds on it with thread pools.

Threads and Multiple Cores

In many languages, one of the main advantages of threads is that they let a program use multiple CPU cores. In CPython, however, the global interpreter lock (discussed below) allows only one thread to execute Python bytecode at a time, so threads mostly speed up programs by overlapping waits for I/O; code in C extensions that releases the lock can still run on several cores. Either way, the code must be designed to run concurrently. This means that the threads should perform independent tasks, without interfering with each other. If the threads need to share resources, such as variables or data structures, developers need to ensure that the access to these resources is thread-safe.

Let’s see how we can convert the script in base.py into one that uses threads.

# threads.py
import concurrent.futures
import uuid

import requests

import base  # base.py


def download_image(img_url: str) -> None:
    img_bytes = requests.get(img_url).content
    # A random UUID suffix keeps file names unique across runs
    img_name = f"{img_url.split('/')[3]}{uuid.uuid4()}"
    img_name = f'{img_name}.jpg'
    with open(img_name, 'wb') as img_file:
        img_file.write(img_bytes)
    print(f'{img_name} was downloaded...')


def run_download_images():
    # Leaving the with block waits for all submitted downloads to finish
    with concurrent.futures.ThreadPoolExecutor() as executor:
        executor.map(download_image, base.IMG_URLS)

The code above simply creates a ThreadPoolExecutor, which takes care of creating and managing the threads.
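To see the same pattern without depending on the network, the sketch below replaces the HTTP request with time.sleep. The fake_download function, the example.com URLs, and the 0.05 second delay are illustrative assumptions, not part of the article's scripts.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url: str) -> str:
    """Pretend to fetch `url`; time.sleep stands in for network latency."""
    time.sleep(0.05)
    return f"{url} done"


def run_sequential(urls: list[str]) -> list[str]:
    return [fake_download(u) for u in urls]


def run_threaded(urls: list[str]) -> list[str]:
    # Consuming the map() iterator also re-raises any worker exception,
    # which is never surfaced if the iterator is left unread.
    with ThreadPoolExecutor(max_workers=8) as executor:
        return list(executor.map(fake_download, urls))


if __name__ == "__main__":
    urls = [f"https://example.com/img{i}" for i in range(8)]
    for runner in (run_sequential, run_threaded):
        start = time.perf_counter()
        runner(urls)
        print(f"{runner.__name__}: {time.perf_counter() - start:.2f}s")
```

Because the threads spend their time sleeping rather than computing, the threaded run should finish in roughly the time of a single simulated download.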

Performance of threads.py vs base.py:

Run the benchmark.py:

python3.10 benchmark.py

We can see that using threads allowed us to download the images ~1.7x faster than the sequential base script.

Thread Safety and the GIL

Thread safety is a critical consideration when working with threads in Python. When multiple threads access shared resources, such as variables or data structures, they can create race conditions, which can lead to unexpected results. To ensure thread safety, developers use synchronization mechanisms such as locks, semaphores, and barriers. A lock, for example, ensures that only one thread can access a shared resource at a time, preventing race conditions.
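As a minimal sketch of lock-based synchronization, the example below guards a shared counter with threading.Lock. The Counter class and the hammer helper are hypothetical names chosen for illustration.

```python
import threading


class Counter:
    """A counter whose increment is guarded by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Read-modify-write is not atomic; the lock makes it one critical section.
        with self._lock:
            self.value += 1


def hammer(counter: Counter, n_threads: int = 4, n_incr: int = 10_000) -> int:
    """Increment `counter` from several threads and return the final value."""

    def work() -> None:
        for _ in range(n_incr):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(hammer(Counter()))
```

With the lock in place, the final value always equals the number of threads multiplied by the increments per thread; without it, updates could be lost.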

Developers also need to be aware of the global interpreter lock (GIL), a mechanism used by CPython to ensure that only one thread executes Python bytecode at a time. The GIL limits the performance of CPU-bound threaded programs, and while it protects the interpreter's internal state, it does not make application code thread-safe on its own.

3. Multiprocessing

Multiprocessing is another way to take advantage of multiple cores in a CPU. Unlike threading, it uses separate processes, each with its own Python interpreter and memory space, so the processes are not limited by a shared GIL. This makes it well suited to CPU-bound tasks that require a lot of processing power, although it can be used for I/O-bound tasks too. Multiprocessing is easy to use in Python, thanks to the multiprocessing module. Developers can spawn multiple processes to run simultaneously, thus making their code run faster.

# multiprocessing_example.py
import concurrent.futures
import multiprocessing
import uuid

import requests

import base


def download_image(img_url: str) -> None:
    img_bytes = requests.get(img_url).content
    img_name = f"{img_url.split('/')[3]}{uuid.uuid4()}"
    img_name = f'{img_name}.jpg'
    with open(img_name, 'wb') as img_file:
        img_file.write(img_bytes)
    print(f'{img_name} was downloaded...')


def run_download_images() -> None:
    with concurrent.futures.ProcessPoolExecutor() as executor:
        executor.map(download_image, base.IMG_URLS)
        # Count the worker processes while the pool is still alive
        print(f"Using {len(multiprocessing.active_children())}/{multiprocessing.cpu_count()} CPUs")

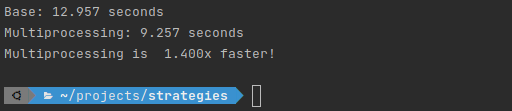

Performance of multiprocessing_example.py vs base.py:

Run the benchmark.py:

python3.10 benchmark.py

We can see we did get some performance benefits using multiprocessing. Even though multiprocessing can be used for I/O bound tasks, it’s better suited for CPU-bound tasks.
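To illustrate why processes suit CPU-bound work, the sketch below runs the same computation through a thread pool and a process pool. The count_primes function and the chosen limits are illustrative assumptions; actual timings depend on the machine and its number of cores.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """Count primes below `limit` by trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def timed(executor_cls, limits: list[int]) -> float:
    """Run count_primes over `limits` with the given executor class."""
    start = time.perf_counter()
    with executor_cls() as executor:
        list(executor.map(count_primes, limits))
    return time.perf_counter() - start


if __name__ == "__main__":  # required on platforms that spawn worker processes
    limits = [20_000] * 4
    print(f"threads:   {timed(ThreadPoolExecutor, limits):.2f}s")
    print(f"processes: {timed(ProcessPoolExecutor, limits):.2f}s")
```

On a multi-core machine the process pool would typically finish sooner, because each worker has its own interpreter, while the threads take turns executing Python code.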

4. Asyncio

Python's asyncio module allows concurrent code to be written using the async/await syntax. It enables programmers to write non-blocking code that handles many jobs at once on a single thread, without multiple threads or processes. asyncio is best suited to I/O-bound tasks, such as network requests, that spend most of their time waiting for external resources to respond. Its high-level API hides many low-level details, although debugging asynchronous code can sometimes be hard.

# asyncio_example.py
import asyncio
import uuid

import aiohttp  # the requests library is synchronous, and so we'll use `aiohttp` which works better with asyncio

import base


async def download_image(session: aiohttp.ClientSession, img_url: str) -> None:
    async with session.get(img_url) as response:
        img_bytes = await response.read()
    img_name = f"{img_url.split('/')[3]}{uuid.uuid4()}"
    img_name = f'{img_name}.jpg'
    # The file write is blocking, but brief compared with the download
    with open(img_name, 'wb') as img_file:
        img_file.write(img_bytes)
    print(f'{img_name} was downloaded...')


async def run_download_images() -> None:
    # Share one session so connections are pooled across downloads
    async with aiohttp.ClientSession() as session:
        await asyncio.gather(*[download_image(session, url) for url in base.IMG_URLS])


def download_images_async() -> None:
    asyncio.run(run_download_images())

Performance of asyncio_example.py vs base.py:

Run the benchmark.py:

python3.10 benchmark.py

The asyncio_example.py script took ~9.32862888701493 seconds to run, ~1.568x faster than the base.py script (~14.631273603008594 seconds).
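The gather pattern can also be tried without a network connection. In the sketch below, asyncio.sleep stands in for the HTTP request and a semaphore caps the number of downloads in flight; fake_download, download_all, and max_concurrent are hypothetical names, and the concurrency cap is an addition that the article's script does not have.

```python
import asyncio


async def fake_download(url: str, limit: asyncio.Semaphore) -> str:
    """Pretend to fetch `url`; asyncio.sleep stands in for network latency."""
    async with limit:  # wait here if too many downloads are already running
        await asyncio.sleep(0.05)
        return f"{url} done"


async def download_all(urls: list[str], max_concurrent: int = 5) -> list[str]:
    limit = asyncio.Semaphore(max_concurrent)
    # gather() returns results in the same order as the awaitables passed in.
    return await asyncio.gather(*(fake_download(u, limit) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/img{i}" for i in range(10)]
    print(asyncio.run(download_all(urls)))
```

Capping concurrency is a common courtesy towards the remote server when the list of URLs is long.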

5. Cython

Cython is a superset of Python that compiles code to optimized C or C++. Developers write Python code, optionally with Cython-specific syntax such as C type declarations, and compile it into a C extension module that runs at closer to C speed. Cython is a good fit for CPU-bound tasks that demand a lot of processing capacity.

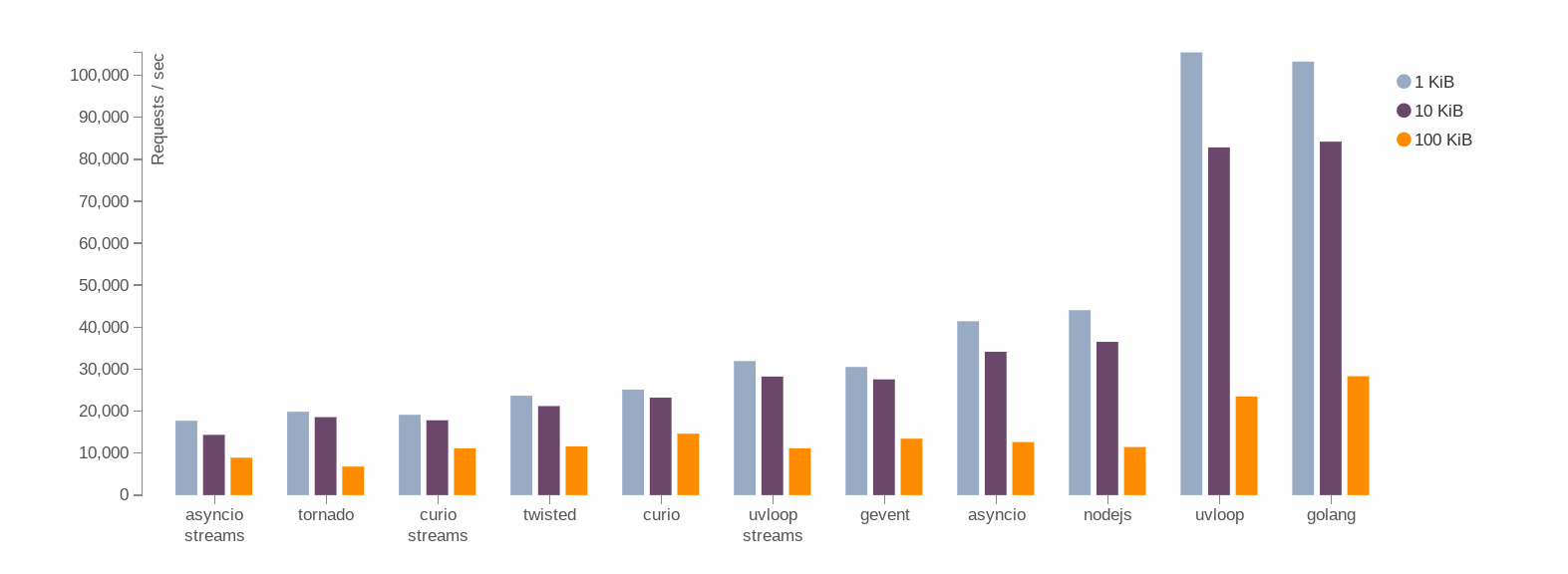

A practical example of a project that has used Cython to speed up Python is uvloop, an ultra fast asyncio event loop. On average, uvloop makes asyncio 2-4x faster.

Using Cython

In the same way that Python code is written in .py files, Cython code is written in .pyx files.

Type Declarations

One of the main benefits of Cython is the ability to declare the types of variables, function arguments, and return types. This allows the Cython compiler to generate highly optimized C code. For example:

# base.py
import time


def fib(n: int) -> int:
    if n <= 1:
        return n
    else:
        return fib(n - 2) + fib(n - 1)


t0 = time.time()
fib(32)
print(f"Time: {time.time() - t0}")

The type of n and the return type are declared as int using ordinary Python annotations. Type information lets the Cython compiler generate more specialised C code; for full C-level typing, Cython also offers cdef declarations such as cdef int.

Run the script with `python3.10 base.py`. The function takes ~0.37893152236938477 seconds to run.

Now, to test our Cython code, copy the script in base.py to a new file called base_cython.pyx.

Next, we’ll create a setup.py file with the following code:

from setuptools import setup
from Cython.Build import cythonize

setup(
    name='Base script',
    ext_modules=cythonize("base_cython.pyx"),
    zip_safe=False,
)

To build the extension, run `python setup.py build_ext --inplace`.

To run it, start a Python shell and execute `from base_cython import fib`. It takes ~0.09149384498596191 seconds to run. With Cython, we gained a performance benefit of ~4x compared to the pure Python code.

Using C Libraries

Cython allows developers to use C libraries directly in their Python code. This can result in significant performance improvements, especially for computationally intensive tasks. For example:

cdef extern from "math.h":
    double sin(double)


def compute_sine(double x):
    return sin(x)

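Here the sin function from the C header math.h is called directly from Cython code. When it is called from typed Cython code, this avoids the overhead of a Python-level function call, which can be noticeably faster than going through Python's math module in tight loops.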

Memory Management

Cython allows developers to manage memory directly, which can result in significant performance improvements. For example:

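from libc.stdlib cimport malloc, free  # C allocator functions

cdef int n = 10  # example size
cdef int *arr = <int *>malloc(sizeof(int) * n)
if arr == NULL:
    raise MemoryError()
for i in range(n):
    arr[i] = i
free(arr)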

In the above example, memory is allocated using the malloc function from the C standard library. The free function is used to release the memory after it is no longer needed.

6. Mypyc

Mypyc is a static compiler that generates C extension modules from type-annotated Python modules. It can be used to optimize and speed up Python code, because the compiled extension runs faster than interpreted Python code. It is compatible with Python 3.5 and above.

To use mypyc, you need to install mypy, which includes it (link to the docs).

Since Mypyc utilises static typing, we’ll change our base script to use a simple fibonacci function that is typed:

# base.py
import time


def fib(n: int) -> int:
    if n <= 1:
        return n
    else:
        return fib(n - 2) + fib(n - 1)


t0 = time.time()
fib(32)
print(f"Time: {time.time() - t0}")

Run the script with `python3.10 base.py`. The function takes ~0.37893152236938477 seconds to run.

To compile our code, we’ll first check for any typing issues in our code.

mypy base.py

Next, we’ll compile the program to a binary C extension.

mypyc base.py

To test our compiled code, we’ll run `python3.10 -c "import base"`, which takes about 0.018268108367919922 seconds. With mypyc, we gained a performance benefit of ~20x compared to the pure Python code.

We use python3.10 -c to run the compiled module as a program.
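Because base.py and the compiled extension sit side by side, it can be worth confirming which one an import actually loaded. The helper below is a hedged sketch: is_compiled is a hypothetical name, and it only checks whether the module's file has one of the extension-module suffixes known to the running interpreter.

```python
import importlib
from importlib.machinery import EXTENSION_SUFFIXES


def is_compiled(module_name: str) -> bool:
    """Return True if `module_name` was loaded from a C extension file."""
    module = importlib.import_module(module_name)
    # Built-in modules have no __file__; they are reported as not file-based.
    path = getattr(module, "__file__", None) or ""
    return path.endswith(tuple(EXTENSION_SUFFIXES))


if __name__ == "__main__":
    # "json" is a pure-Python package in the standard library.
    print(is_compiled("json"))
```

After compiling with mypyc, calling is_compiled("base") from the same directory would be expected to return True if the extension was picked up.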

7. PyPy

PyPy is an alternative implementation of the Python language. It includes a Just-In-Time (JIT) compiler, which means that it compiles frequently executed Python code to machine code on the fly. PyPy provides interpreters for Python 2.7 and for Python 3, although its Python 3 support usually trails the latest CPython release.

PyPy is known for its excellent performance on CPU-bound tasks. It has several features that make it a powerful tool for optimizing Python code. For example, it uses a technique called "JIT specialization" to generate optimized machine code for frequently executed parts of the code.

You can install Pypy from this link

Let's use Pypy to run our Fibonacci script:

pypy3 base.py

The script takes ~0.02586054801940918 seconds to run, ~14.6x faster than running it with python3.10, which took about 0.37893152236938477 seconds.
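When the same script is timed under several interpreters, it helps to print which one produced each number. The following is a small sketch using only the standard library; interpreter_label is a hypothetical helper name.

```python
import platform
import sys


def interpreter_label() -> str:
    """Describe the running interpreter as '<implementation> <language version>'."""
    impl = platform.python_implementation()  # e.g. "CPython" or "PyPy"
    version = ".".join(str(part) for part in sys.version_info[:3])
    return f"{impl} {version}"


if __name__ == "__main__":
    print(f"Running on {interpreter_label()}")
```

Adding this line to the benchmark output makes it harder to mix up results from python3.10 and pypy3.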

8. Mamba

Mamba is a fast, drop-in replacement for the conda package manager. It uses the conda package format and can be used with the Anaconda distribution of Python. Its speed advantage concerns installing packages and resolving dependencies; whether your code runs any faster depends on how each package was built, so some packages installed with Mamba will perform the same as those installed using pip.

Numpy is one package whose conda build can outperform the pip build, likely because the two are compiled against different linear-algebra (BLAS) libraries. To install and set up Mamba, follow the documentation. We'll change our base script to:

import time

import numpy as np

arr1 = np.random.rand(1000000)
arr2 = np.random.rand(1000000)

# Time only the dot product, not the array creation
t0 = time.time()
result = np.dot(arr1, arr2)
print(f"Time taken: {time.time() - t0}")

Performance of pip vs mamba:

Install numpy using pip and run the base script as follows:

# install

pip install numpy

# run

python3.10 base.py

The script takes ~0.004205226898193359 seconds to run.

Let's install numpy using mamba:

# install

mamba install numpy

# run

python3.10 base.py

This time, the script takes ~0.0014407634735107422 seconds to run, which is ~2.92x faster than with the pip-installed numpy.

It is important to note that if the package(s) you're using is not highly optimised in mamba, you'll notice very small performance gains.

9. Native Code

Native code refers to machine code that is executed directly by a computer's processor. Native code is typically generated by a compiler that translates high-level programming languages into machine code. Native code is faster and more efficient than interpreted code, such as Python code, because it does not need to be translated at runtime.

In Python, native code can be generated using tools such as mypyc and Cython, which compile Python code into native code. Native code can also be written in other languages, such as C or C++, and called from Python using extension modules.

One advantage of native code is that it is faster and more efficient than interpreted code. Native code can execute faster because it is executed directly by the processor without the need for interpretation. Additionally, native code can be optimized for a specific processor architecture, which can further improve performance.

However, one disadvantage of native code is that it is typically more difficult to write and debug compared to interpreted code. Native code requires more low-level programming knowledge and can be more difficult to debug because it is executed directly by the processor.

10. Codon

Codon is a Python compiler that translates Python code into native machine code. It lets developers write high-level Python code that runs as native code, which can be faster and more efficient than interpreted Python code.

Codon compiles code ahead of time using LLVM, and the codon run command used below compiles and executes a script in one step. It also offers a Just-in-Time (JIT) mode, in which selected functions are compiled at runtime. Codon is not a drop-in replacement for CPython, so some Python features and libraries may not be supported.

To use codon, you first have to install it from this link.

We can run our Fibonacci base.py script using the following command:

codon run -release base.py

Using codon, our script took only ~0.00603247 seconds, which is ~52.48x faster than using Python3.10 (~0.32192111015319824 seconds).

One advantage of Codon is that it generates native code from ordinary Python syntax, without a separate build step like those of mypyc or Cython, and without needing to learn a new language.

However, one disadvantage of Codon is that it may not be suitable for all use cases. It is best suited to compute-heavy code, such as numerical computation or machine learning, and programs that rely on unsupported Python features or libraries may need changes. Additionally, Codon may not be as fast as other native code generation tools, such as mypyc or Cython, for certain use cases.

Benchmarks

| Tool | Average Time (secs) | Base Script | Performance |
|---|---|---|---|
| Python3.10 | ~15.19 | base.py (Async) | - |
| Threads | ~10.015 | base.py (Async) | ~1.52x |
| Multiprocessing | ~9.257 | base.py (Async) | ~1.64x |
| Asyncio | ~9.32 | base.py (Async) | ~1.63x |
| - | - | - | - |
| Python3.11 | ~0.21 | base.py (Fibonacci) | ~1.65x |
| Cython | ~0.09 | base.py (Fibonacci) | ~3.99x |
| Mypyc | ~0.018 | base.py (Fibonacci) | ~19.99x |
| Pypy | ~0.025 | base.py (Fibonacci) | ~14.12x |
| Codon | ~0.006 | base.py (Fibonacci) | ~52.48x |
| - | - | - | - |
| pip | ~0.004205 | base.py (Numpy) | - |
| Mamba | ~0.001440 | base.py (Numpy) | ~2.92x vs pip |
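The Performance column is the baseline time divided by the tool's time. As a worked example, the sketch below applies that formula to the single-run Fibonacci timings quoted in the individual sections; the table lists averages, so its figures differ slightly. The speedup function and the constant names are illustrative.

```python
# Single-run timings (seconds) quoted in the sections above for the Fibonacci script.
BASELINE = 0.37893152236938477  # python3.10
TIMINGS = {
    "Cython": 0.09149384498596191,
    "Mypyc": 0.018268108367919922,
    "Pypy": 0.02586054801940918,
}


def speedup(baseline: float, measured: float) -> float:
    """How many times faster `measured` is than `baseline`."""
    return baseline / measured


if __name__ == "__main__":
    for tool, seconds in TIMINGS.items():
        print(f"{tool}: {speedup(BASELINE, seconds):.2f}x")
```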

In conclusion, there are various strategies and tools that can be used to optimize the performance of Python code. While native code and Cython can significantly speed up computationally intensive activities, multithreading and multiprocessing enable better utilisation of system resources. Packages built for scientific computing can also be installed with the Mamba package manager. The approach or tool that you decide to use will ultimately depend on what your code is trying to achieve.

It's crucial to remember, though, that readability and maintainability shouldn't suffer in the name of speed optimisation. Even if it means giving up some performance benefits, we should as developers aim to create clear, concise, and simple-to-understand code.