Mon 24 February 2025

From Moonshot to Production: Building Ostorlab Copilot

What is Agentic AI? A New Era of Intelligent Assistance

Agentic AI marks a transformative shift in artificial intelligence, moving beyond passive responses to autonomous action, continuous learning, and informed decision-making. These AI agents leverage structured tools, contextual awareness, and reasoning capabilities to deliver intelligent, adaptive, and task-specific assistance.

At Ostorlab, we recognized the potential of agentic AI early on and have been integrating it into our platform to enhance security operations and user experience.

Our goal is to enable AI-driven automation, guided decision-making, and interactive support.

Inspired by tools like GitHub Copilot and Microsoft Copilot—both of which showcase how AI can boost productivity and streamline workflows through intuitive UI interactions—we developed AI agents that provide seamless, intelligent assistance while maintaining a fluid and user-friendly interface.

For example, when using Ostorlab’s attack surface management, organizations often need to analyze hundreds or even thousands of potential assets. Manually verifying each asset to confirm or discard ownership is time-consuming and tedious. By leveraging a large language model (LLM), we can automate this process, reducing hours of manual work to zero.

Instead of reviewing assets one by one, users can simply instruct the LLM to confirm all assets that meet specific criteria, making validation faster, more efficient, and fully automated.

Ostorlab’s Vision: AI-Powered Security Assistance

Ostorlab Copilot is an AI-driven assistant designed to answer security and platform-related questions, help manage your organization, and execute tasks on your behalf, such as creating scans and managing tickets.

By leveraging large language models and integrated tools, Copilot provides an efficient and user-friendly solution for handling daily operations, ultimately streamlining and enhancing your workflow.

This article outlines our journey in implementing this feature, the challenges we encountered, and the lessons we learned along the way.

Moonshot Week: The Genesis of Copilot

Moonshot Week is an internal event where we dedicate a full week to exploring bold, ambitious ideas—concepts that may seem far-fetched, difficult, or even impossible to implement.

During one such week, several team members proposed the idea of an Ostorlab Copilot and teamed up to build a working prototype.

Before this, our team had already gained experience with agentic AI frameworks like CrewAI and LangChain, so we set our sights on leveraging them for this experiment.

To our surprise, within just a few days, we had a proof of concept (PoC) up and running. Not only did we build a fully functional UI, but we also enabled the AI to answer questions based on documentation and interact with the platform. The Moonshot was a clear success.

With the promising results, we decided to move forward with the idea. The initial PoC was discarded, and a new implementation was designed from the ground up. However, little did we know the challenges that lay ahead—what worked for a single, simple task in a controlled environment had to be reimagined to scale efficiently and cover significantly more use cases. The journey had only just begun.

Tech Stack and Challenges

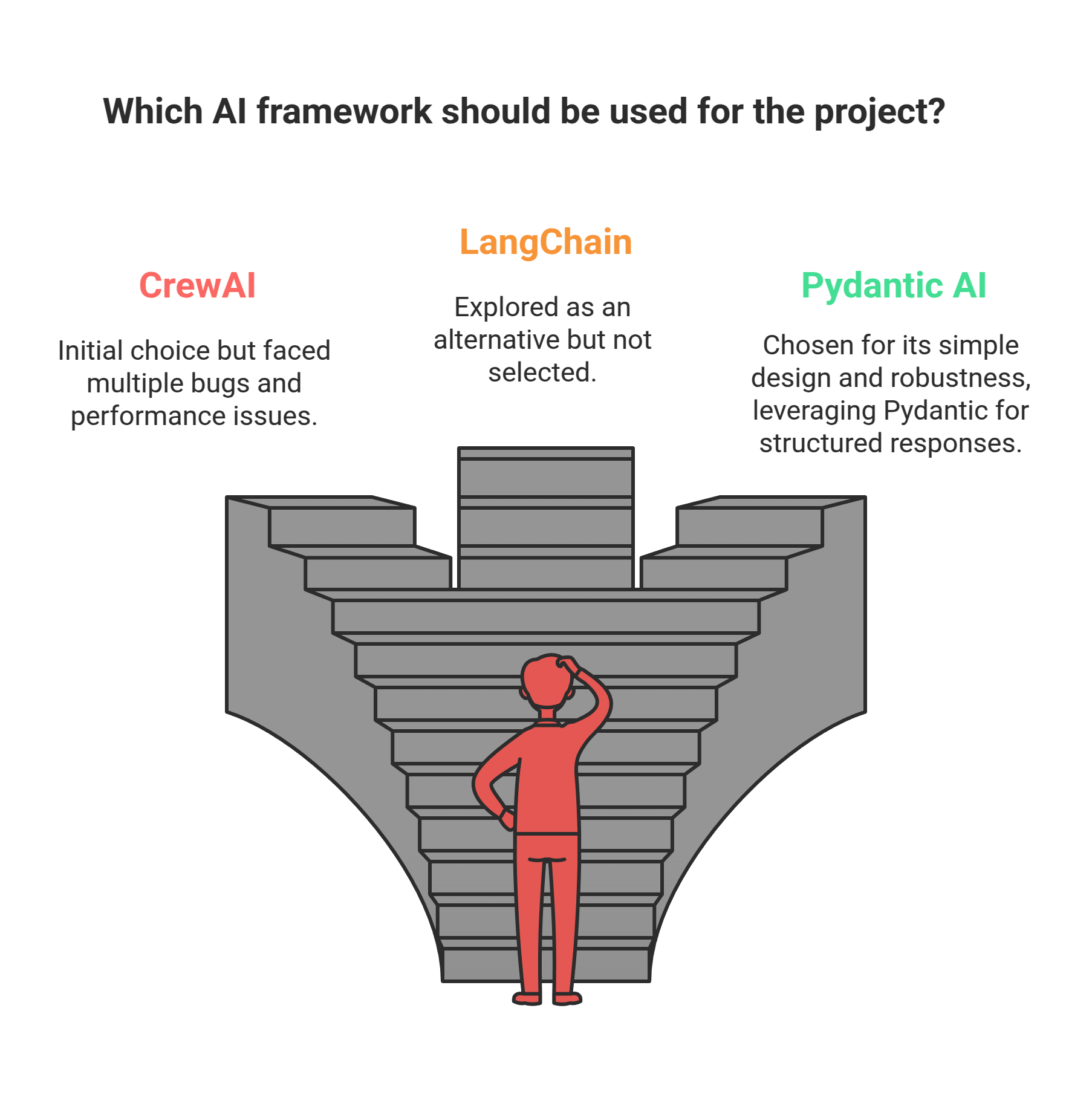

Frameworks: CrewAI vs. PydanticAI vs. LangChain

During Moonshot Week, our initial proof of concept (PoC) was built using CrewAI. However, we encountered multiple bugs and performance issues. We then explored LangChain as an alternative but ultimately chose PydanticAI for its simplicity and robustness.

PydanticAI stood out as the best choice for our use case. It leverages Pydantic to generate structured, typed responses when interacting with the LLM, ensuring a more reliable and efficient experience.

Example of Agent Declaration

from pydantic_ai import Agent

security_agent = Agent(
    'openai:gpt-4o',
    deps_type=int,
    result_type=str,
    system_prompt='Provides security insights and best practices.',
)

Enforcing Structured, Typed Responses

A key strength of PydanticAI is its ability to enforce structured and formatted responses. By utilizing Pydantic's validation, the agent ensures that outputs adhere to predefined data types, making them easier to parse and integrate into applications.

Example: Structured Output with Pydantic

from pydantic import BaseModel
from pydantic_ai import Agent

class CityLocation(BaseModel):
    city: str
    country: str

agent = Agent('google-gla:gemini-1.5-flash', result_type=CityLocation)
result = agent.run_sync('Where were the Olympics held in 2012?')
print(result.data)
#> city='London' country='United Kingdom'

Tool Integration in PydanticAI

One of the most powerful features of PydanticAI is its ability to integrate tools—simple Python functions that the agent can call and utilize. However, this functionality depends on the model’s capability to support tool usage, and not all LLMs provide this feature.

Example: Defining a Tool

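from pydantic_ai import RunContext

@security_agent.tool
def fetch_vulnerability_data(ctx: RunContext[int], vuln_name: str) -> str:
    """Return details about the named vulnerability."""
    # Tools registered with @agent.tool receive the run context as their first argument.
    return f"Details for vulnerability {vuln_name}: ..."

# success_number: an int dependency defined elsewhere (the agent declares deps_type=int).
result = security_agent.run_sync('What is SQL injection?', deps=success_number)
print(result.data)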

By combining structured outputs and tool integration, PydanticAI provides a robust framework for building AI-driven applications with greater reliability and control.

Benchmarking LLM Models

While working on the project, we continuously benchmarked multiple LLMs to learn what each could and couldn't do. We aimed to find the best balance between speed, quality, and cost. Another key factor in our decision was whether an LLM supported JSON responses and tool calling.

1. Scenario Definition

Each test scenario is meticulously defined with:

- A unique identifier

- Target model (e.g., gemini-2.0-flash-001 or gpt-4o-mini)

- System prompt (Technical, Simple, or Security-focused)

- User Prompt

- Expected output

- Similarity threshold for evaluation
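As a rough sketch, a scenario carrying the fields listed above could be captured in a small immutable record. The class name, field names, prompt-style labels, and the 0.75 threshold are illustrative assumptions, not Ostorlab's actual harness.

```python
from dataclasses import dataclass
from typing import Literal

# Hypothetical labels for the three system-prompt styles described above.
PromptStyle = Literal["technical", "simple", "security"]

@dataclass(frozen=True)
class Scenario:
    """One benchmark case: which model to call, with which prompts, and what we expect back."""
    scenario_id: str             # unique identifier
    model: str                   # e.g. "gemini-2.0-flash-001" or "gpt-4o-mini"
    system_prompt_style: PromptStyle
    user_prompt: str
    expected_output: str         # reference answer used for similarity scoring
    similarity_threshold: float  # minimum score for the run to count as a pass

    def __post_init__(self) -> None:
        # Reject thresholds outside the range a similarity score can take.
        if not 0.0 <= self.similarity_threshold <= 1.0:
            raise ValueError("similarity_threshold must be between 0 and 1")

scenarios = [
    Scenario(
        scenario_id="web-scan-technical-gemini",
        model="gemini-2.0-flash-001",
        system_prompt_style="technical",
        user_prompt="how to do a web application scan?",
        expected_output="...",     # reference answer elided
        similarity_threshold=0.75,  # illustrative value
    ),
]
```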

2. Execution Engine

Responsible for executing test scenarios, measuring response time, tracking token usage, and evaluating response quality using semantic similarity metrics.
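The execution step can be sketched as a function that times one model call, records its token count, and scores the answer against the expected output using cosine similarity over embeddings. This is a minimal sketch under stated assumptions. `call_model` and `embed` are hypothetical hooks that stand in for whichever LLM client and embedding model the real engine uses.

```python
import math
import time
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical hooks: plug in a real LLM client and embedding model here.
CallModel = Callable[[str, str, str], tuple[str, int]]  # (model, system, user) -> (answer, tokens)
Embed = Callable[[str], Sequence[float]]                 # text -> embedding vector

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

@dataclass
class RunResult:
    model: str
    elapsed_s: float
    tokens: int
    similarity: float
    passed: bool

def run_scenario(model: str, system_prompt: str, user_prompt: str, expected: str,
                 threshold: float, call_model: CallModel, embed: Embed) -> RunResult:
    start = time.perf_counter()
    answer, tokens = call_model(model, system_prompt, user_prompt)
    elapsed = time.perf_counter() - start  # wall-clock time of the model call only
    similarity = cosine_similarity(embed(answer), embed(expected))
    return RunResult(model, elapsed, tokens, similarity, similarity >= threshold)
```

In tests, the hooks can be replaced with fakes, for example a lambda that returns a canned answer. This keeps the engine's own logic checkable without network calls.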

3. Analysis System

Provides in-depth analysis, including:

- Semantic similarity calculations to gauge response accuracy

- Token consumption tracking to optimize resource usage

- Comprehensive reporting for performance insights
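Per-model figures like those in the analysis below (average response time, average token usage, total cost, similarity range) can be derived from individual run records with a simple group-by. The `Row` record and `summarize` function are hypothetical names used only for this sketch.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

@dataclass(frozen=True)
class Row:
    """One benchmark run, mirroring the columns of the results table."""
    model: str
    similarity: float
    tokens: int
    cost_usd: float
    time_s: float

def summarize(rows: list[Row]) -> dict[str, dict]:
    """Group runs by model and compute per-model aggregates."""
    by_model: dict[str, list[Row]] = defaultdict(list)
    for row in rows:
        by_model[row.model].append(row)
    summary = {}
    for model, group in by_model.items():
        similarities = [r.similarity for r in group]
        summary[model] = {
            "avg_time_s": mean(r.time_s for r in group),
            "avg_tokens": mean(r.tokens for r in group),
            "total_cost_usd": sum(r.cost_usd for r in group),
            "similarity_range": (min(similarities), max(similarities)),
        }
    return summary
```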

Comprehensive Test Results

| System Prompt | Model | User Prompt | Similarity | Token Count | Cost (USD) | Time |
|---|---|---|---|---|---|---|
| AI assistant specialized in Ostorlab platform | gemini-2.0-flash-001 | how to do an android store scan? | 0.80 | 10,904 | $0.0027 | 13.06s |
| AI assistant specialized in Ostorlab platform | gpt-4o-mini | how to do an IOS store scan? | 0.77 | 12,245 | $0.0046 | 14.80s |
| Technical specialist providing precise guidance | gemini-2.0-flash-001 | how to do a web application scan? | 0.87 | 2,967 | $0.0007 | 3.33s |
| Technical specialist providing precise guidance | gpt-4o-mini | how to do a web application scan? | 0.86 | 3,108 | $0.0012 | 16.31s |
| Helpful assistant focused on simple language | gemini-2.0-flash-001 | how to scan multiple websites at once? | 0.38 | 2,810 | $0.0007 | 1.96s |
| Helpful assistant focused on simple language | gpt-4o-mini | how to scan multiple websites at once? | 0.81 | 5,047 | $0.0019 | 10.47s |
| Security-focused advisor emphasizing best practices | gemini-2.0-flash-001 | how to do an authenticated web scan? | 0.86 | 3,837 | $0.0010 | 10.24s |
| Security-focused advisor emphasizing best practices | gpt-4o-mini | how to do an authenticated web scan? | 0.78 | 13,199 | $0.0050 | 24.94s |
| Technical specialist providing precise guidance | gemini-2.0-flash-001 | list open tickets with their details | 0.72 | 6,927 | $0.0017 | 7.68s |
| Technical specialist providing precise guidance | gpt-4o-mini | list open tickets with their details | 0.79 | 10,518 | $0.0040 | 13.65s |
| Helpful assistant focused on simple language | gemini-2.0-flash-001 | show me p0 tickets | 0.69 | 5,898 | $0.0015 | 4.60s |
| Helpful assistant focused on simple language | gpt-4o-mini | show me my P0 tickets | 0.72 | 9,602 | $0.0037 | 7.84s |

Analysis of Results

Model Performance Characteristics

Gemini 2.0 Flash

- Average response time: 6.52s

- Average token usage: 4,724

- Total cost (USD): $0.0083

- Similarity score range: 0.38-0.87

- Best performance: Technical web scans (0.87)

- Weakest performance: Simple multi-website scanning (0.38)

GPT-4o Mini

- Average response time: 13.58s

- Average token usage: 10,633

- Total cost (USD): $0.0201

- Similarity score range: 0.72-0.86

- Best performance: Technical web scans (0.86)

- Most consistent: User-friendly scenarios (0.72-0.81)

Gemini Flash 2.0 (Google)

We attempted to use Gemini Flash 2.0, but the quality of the responses was not satisfactory.

Gemini Pro 2.0 Experimental (Google)

Gemini Pro 2.0 Experimental (free) produced good results, but it is limited by quota, which restricts its usage.

Mistral: Ministral 8B

The Mistral: Ministral 8B model encountered errors when used with the tools, preventing successful runs.

Mistral: Ministral 3B

Like its larger counterpart, Mistral: Ministral 3B also raised errors because of tool-related issues.

Other Models

We also explored o1 and o1-mini, DeepSeek, and Groq LLAMA 3.3 R1 Distill, but these models were either too expensive, too slow, or didn’t support tool calling or structured responses.

Model Selection Strategy

Use Gemini for

- Technical documentation queries: Gemini handles technical web-scan and documentation-related queries quickly and with high similarity scores, but struggles with more complex tasks. The Pro version showed much better capabilities but is not yet available for production use.

- Quick-response applications: With a faster average response time (6.52s), Gemini is suitable for applications where rapid responses are critical.

- Resource-constrained scenarios: Due to its lower token usage and cost efficiency, Gemini is ideal when system resources or budgets are limited.

- Security-focused documentation: Gemini’s strong results in technical scenarios make it a good fit for security-related tasks or documentation that requires accuracy.

Use GPT-4o Mini for

- User-facing interactions: GPT-4o Mini’s consistency across user-friendly scenarios makes it a great choice for chatbots, customer service, or conversational AI.

- Complex, multi-step workflows: It maintains stable similarity scores even on token-heavy requests, so it can handle complex, multi-stage tasks reliably.

- Scenarios requiring consistency: With a narrower similarity score range (0.72-0.86), GPT-4o Mini provides more predictable outputs, making it ideal for tasks where consistency is key.

- Natural language-heavy interactions: GPT-4o Mini is well-suited for natural language processing tasks like story generation, report writing, or detailed user instructions, where fluency and clarity are essential.

Starting Simple: UI and API Development

When we started on the fundamental building blocks, the user interface (UI) and the API, we had several options for implementing the API, including WebSockets, GraphQL, and REST.

While WebSockets may seem like the best option thanks to their support for streaming and real-time messaging, we ultimately chose GraphQL. It is already widely used across our platform, which let us ship faster without significant changes to the platform or existing infrastructure.

[Screenshot of the UI]

Structured Components UI

We experimented with using structured components to enhance the AI's ability to provide visually rich and interactive responses.

The concept of structured components involves the AI returning not just plain text or markdown answers but also structured UI elements like tables, images, and interactive components. Our goal was to improve the clarity and usability of the AI's responses.

Here’s an example of the expected structured components:

Agent Answer Format:

"message": {
  "components": [
    {
      "type": "Text",
      "content": "Step 1: Click the scans menu"
    },
    {
      "type": "Image",
      "url": "https://placehold.co/600x400/EEE/31343C"
    },
    {
      "type": "Text",
      "content": "Step 2: Click on 'Create a Scan'"
    },
    {
      "type": "Table",
      "data": {
        "headers": ["Name", "ID"],
        "rows": [...]
      }
    }
  ]
}

This approach would be extremely helpful for rendering complex information from AI responses, such as graphs, tables, and custom cards.
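One way to make the component format above enforceable is to describe it as Pydantic models with a discriminated union on the `type` field. Each component is then validated against the right shape. This is a sketch that assumes Pydantic v2. The class names are hypothetical, and table rows are assumed to be lists of strings because the original example elides them. A model like `Message` could then be passed as the agent's `result_type`, in the same way as `CityLocation` earlier.

```python
from typing import Annotated, Literal, Union

from pydantic import BaseModel, Field

class TextComponent(BaseModel):
    type: Literal["Text"]
    content: str  # may still contain Markdown

class ImageComponent(BaseModel):
    type: Literal["Image"]
    url: str

class TableData(BaseModel):
    headers: list[str]
    rows: list[list[str]]  # assumption: each row is a list of cell strings

class TableComponent(BaseModel):
    type: Literal["Table"]
    data: TableData

# The "type" field tells Pydantic which model to validate each component against.
Component = Annotated[
    Union[TextComponent, ImageComponent, TableComponent],
    Field(discriminator="type"),
]

class Message(BaseModel):
    components: list[Component]

message = Message.model_validate({
    "components": [
        {"type": "Text", "content": "Step 1: Click the scans menu"},
        {"type": "Image", "url": "https://placehold.co/600x400/EEE/31343C"},
        {"type": "Table", "data": {"headers": ["Name", "ID"], "rows": [["example", "1"]]}},
    ]
})
print([type(c).__name__ for c in message.components])
```

Validation guarantees the shape of each component. It does not guarantee that the model will split its answer into several components.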

Unfortunately, gpt-4o-mini consistently defaulted to a single text component containing everything in Markdown format, instead of returning structured components. For instance:

"message": {
  "components": [
    {
      "type": "Text",
      "content": "Step 1: Click the scans menu \n ...."
    }
  ]
}

This limitation prompted us to adapt our approach to UI rendering, ensuring better compatibility with the selected model while striving to maintain structured responses where possible.

Interactions with the Platform and Tool Calling

To enable the agent to interact with the platform—such as reading data or performing actions—we considered several approaches:

- Direct SQL Queries: This option was quickly dismissed due to concerns regarding security and stability.

- Using Existing APIs: Although this was a reasonable choice, it introduced performance overhead, which was not ideal for our needs.

- Custom Tools: Ultimately, the best approach was to develop custom tools that leverage our existing infrastructure. This method allows us to interact with the platform efficiently, enabling us to execute queries and actions as quickly as possible while maintaining full control over authorization.

Example of a Tool to List Tickets:

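from pydantic_ai import RunContext

@security_agent.tool
def list_tickets(ctx: RunContext[int], status: str):
    # fetch_tickets_from_db: internal helper (not shown) that reads tickets through our existing infrastructure.
    return fetch_tickets_from_db(status)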

Tool Return Types

When developing tools, we had several options for the type of output they should provide: plain strings, dicts, or Pydantic models. While returning plain strings might seem appealing, they are not maintainable.

Dictionaries are untyped, and language models showed a poor ability to interpret them as tool outputs. Pydantic models were therefore the clear choice.

Additionally, when working with tools, the docstring plays a crucial role in helping the AI agent understand what the tool is and how it functions.

Here's an example of a tool that returns a Pydantic model with a very clear docstring:

import pydantic
from pydantic_ai import RunContext

class TicketType(pydantic.BaseModel):
    """Model for a single ticket"""
    id: int | None = pydantic.Field(None, description="Ticket identifier")
    key: str | None = pydantic.Field(None, description="Ticket key in org-id format")
    ...

@security_agent.tool
def list_tickets(ctx: RunContext[int], status: str) -> list[TicketType]:
    """List tickets based on the provided filters and context.

    Retrieves ticket records matching the specified criteria. All filter parameters are optional
    and can be combined to create complex queries.

    Args:
        ...
    """
    return fetch_tickets_from_db(status)

Handling errors

When working with tools, we wanted to tell the LLM when it had called a tool with incorrect arguments. Raising exceptions was not a viable solution, as this would cause the entire workflow to fail.

Instead, we return an error string describing the problem, guiding the LLM to correct its mistake.

import pydantic
from pydantic_ai import RunContext

class TicketType(pydantic.BaseModel):
    """Model for a single ticket"""
    id: int | None = pydantic.Field(None, description="Ticket identifier")
    key: str | None = pydantic.Field(None, description="Ticket key in org-id format")
    ...

@security_agent.tool
def list_tickets(ctx: RunContext[int], status: str) -> list[TicketType] | str:
    """List tickets based on the provided filters and context.

    Retrieves ticket records matching the specified criteria. All filter parameters are optional
    and can be combined to create complex queries.

    Args:
        ...
    """
    # ALLOWED_STATUS (defined elsewhere) holds the valid status values.
    if status not in ALLOWED_STATUS:
        # Return, don't raise: the message goes back to the LLM so it can retry with a valid value.
        return f"Error: invalid status was provided, status should be one of {ALLOWED_STATUS}"
    return fetch_tickets_from_db(status)

Handling Large Data Sets

When dealing with large sets of data, such as potential nodes in an attack surface, we had to ensure the AI could efficiently process and retrieve relevant information. We addressed this by optimizing context length and implementing pagination mechanisms to handle vast amounts of information without exceeding model limits.

class TicketsType(pydantic.BaseModel):
    """Paginated tickets response type."""
    total: int
    page: int
    tickets: list[TicketType]

@security_agent.tool
def list_tickets(ctx: RunContext[int], status: str, page: int, count: int = 10) -> TicketsType:
    """Return one page of tickets with the given status, plus the total number of matches."""
    return fetch_tickets_from_db(status=status, count=count, page=page)

This allowed us to avoid passing too much or unnecessary data to the AI agent, and to avoid exhausting the context window of the LLM.
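The paging logic behind such a tool can be sketched as follows. This sketch makes several assumptions: pages are 1-based, and `MAX_COUNT` is a hypothetical cap that stops the model from requesting an oversized page. Out-of-range arguments are clamped rather than raised, in keeping with the preference for not failing the workflow.

```python
from dataclasses import dataclass
from typing import Generic, TypeVar

T = TypeVar("T")
MAX_COUNT = 50  # hypothetical cap on page size to protect the model's context window

@dataclass
class Page(Generic[T]):
    total: int      # number of matching items across all pages
    page: int       # 1-based index of this page
    items: list[T]  # the items on this page only

def paginate(items: list[T], page: int, count: int = 10) -> Page[T]:
    """Return one page of `items`, clamping bad arguments instead of raising."""
    page = max(page, 1)
    count = max(1, min(count, MAX_COUNT))
    start = (page - 1) * count
    return Page(total=len(items), page=page, items=items[start:start + count])

tickets = [f"os-{n}" for n in range(1, 26)]
print(paginate(tickets, page=3))
```

Returning `total` alongside each page lets the model decide whether another call is needed, instead of receiving every record at once.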

Data Retrieval for answering questions

To let Copilot answer questions about the platform, the agent needs access to information about the platform and its available features. Luckily, we had already done that work: our documentation describes every feature and how to use it.

We used FAISS to index our entire documentation and provided it as a tool for the agent to search for details related to the user questions.

Initially, we decided to index images and videos alongside Markdown documentation to enable the model to generate visually rich responses. While this approach worked well in some cases, it also led to hallucinations. Upon further investigation, we found that images and videos lacked sufficient textual context, which confused the agent and led to inaccurate responses. Ultimately, we prioritized using only text-based indexing while selectively incorporating visuals where necessary.
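Before indexing, documentation pages have to be split into text chunks. The sketch below shows one way to keep the index text-only. Markdown image links are replaced by their alt text, and paragraphs are packed into chunks of bounded size. The regex, chunk size, and function name are assumptions. The resulting chunks would then be embedded and added to a FAISS index (for example an `IndexFlatIP` over normalized vectors), which is not shown here.

```python
import re

# Markdown image syntax: ![alt text](url). Only the alt text is kept.
IMAGE_RE = re.compile(r"!\[([^\]]*)\]\([^)]*\)")

def text_only_chunks(markdown: str, max_chars: int = 800) -> list[str]:
    """Strip image links and pack paragraphs into chunks of at most ~max_chars."""
    text = IMAGE_RE.sub(lambda m: m.group(1), markdown)
    # Paragraphs left empty (e.g. an image with no alt text) are dropped here.
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        # Start a new chunk if adding this paragraph would exceed the limit.
        # A single paragraph longer than max_chars still becomes its own chunk.
        if current and len(current) + len(para) + 2 > max_chars:
            chunks.append(current)
            current = para
        else:
            current = f"{current}\n\n{para}" if current else para
    if current:
        chunks.append(current)
    return chunks
```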

Context-aware agent

When the user is interacting with the platform, the agent needs to know where the user is and what data they are looking at. For example, if the user is on a ticket page, the agent needs to know which ticket is displayed.

We updated our UI so that every message sent to the agent carries a context object describing the user's current view:

{
  "question": "What this ticket is about?",
  "context": {
    "page": "/remediation/tickets/os-11638",
    "ticketKey": "os-11638"
  }
}
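A minimal sketch of how the backend might fold this context into the text the agent receives, assuming the payload shape above. The function name and prompt wording are hypothetical. The context is supplied by the client, so it should be treated as untrusted input. The tools still act only with the requesting user's own permissions, whatever the context claims.

```python
import json

def build_agent_prompt(raw_payload: str) -> str:
    """Turn the UI payload into a single prompt that states where the user is."""
    payload = json.loads(raw_payload)
    question = payload["question"]
    context = payload.get("context") or {}
    if not context:
        return question
    lines = ["The user is currently viewing:"]
    # Sort keys so identical payloads always produce identical prompts.
    lines += [f"- {key}: {value}" for key, value in sorted(context.items())]
    lines += ["", f"User question: {question}"]
    return "\n".join(lines)

example = json.dumps({
    "question": "What this ticket is about?",
    "context": {"page": "/remediation/tickets/os-11638", "ticketKey": "os-11638"},
})
print(build_agent_prompt(example))
```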

Security Implications

To ensure the AI agent operates within user permissions, we implemented strict authorization controls for all tools. Each tool executes with the same level of access and permissions as the requesting user, preventing unauthorized actions.

We used Python decorators to ensure tools could only be invoked by users with the necessary permissions:

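from pydantic_ai import RunContext

@security_agent.tool
@permissions.require_permission(PermissionAction.READ)
def list_tickets(ctx: RunContext[int], status: str) -> list:
    # The permission check is the inner decorator: @security_agent.tool registers the function
    # it receives, so the check must already wrap it at registration time.
    return fetch_tickets_from_db(status)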
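The decorator itself is not shown in the article. Below is a minimal stand-alone sketch that assumes the requesting user travels in the run context's `deps`. It also assumes a denied call should return an error string, matching the error-handling approach above, rather than raise. `PermissionAction`, `User`, `Ctx`, and `require_permission` here are hypothetical stand-ins, not Ostorlab's code.

```python
import functools
from dataclasses import dataclass, field
from enum import Enum

class PermissionAction(Enum):
    READ = "read"
    WRITE = "write"

@dataclass
class User:
    name: str
    permissions: set[PermissionAction] = field(default_factory=set)

@dataclass
class Ctx:
    """Stand-in for the agent run context; `deps` holds the requesting user."""
    deps: User

def require_permission(action: PermissionAction):
    def decorator(func):
        @functools.wraps(func)  # preserve name, docstring and signature for the agent framework
        def wrapper(ctx, *args, **kwargs):
            if action not in ctx.deps.permissions:
                # Tell the model the call was refused instead of executing it.
                return f"Error: permission denied, {action.value} access is required to call {func.__name__}"
            return func(ctx, *args, **kwargs)
        return wrapper
    return decorator

@require_permission(PermissionAction.READ)
def list_tickets(ctx: Ctx, status: str) -> list[str]:
    """List ticket keys with the given status (dummy data)."""
    return [f"os-1 ({status})"]

reader = Ctx(User("alice", {PermissionAction.READ}))
guest = Ctx(User("bob"))
print(list_tickets(reader, "open"))
print(list_tickets(guest, "open"))
```

`functools.wraps` copies the original function's metadata and sets `__wrapped__`, which `inspect.signature` follows. A framework that builds a tool schema from the signature should therefore still see the original parameters.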

Agent tools also introduce a completely new category of input injection vectors that we had to test for and cover with automated tests, but more on that later 🙂.

Conclusion

Implementing the Ostorlab Copilot AI agent has been an exciting and challenging journey. It is not finished, however: we are already working on a plethora of new additions, including an improved UI and reasoning, and advanced background interactions.

Through careful selection of frameworks, models, and integration methods, we have created a powerful and efficient assistant that enhances the user experience.

While challenges like structured UI responses and model inconsistencies remain, our iterative approach allows us to continuously improve Copilot's capabilities.

Acknowledgements

We would like to extend our heartfelt thanks to the following contributors who have worked tirelessly on the Ostorlab Copilot project:

- Adnane Serrar

- Anas Zouiten

- Mohamed El Yousfi

- Mouhcine Narhmouche

- Othmane Hachim

- Rabson Phiri

Their dedication and expertise have been invaluable in bringing this project to life.